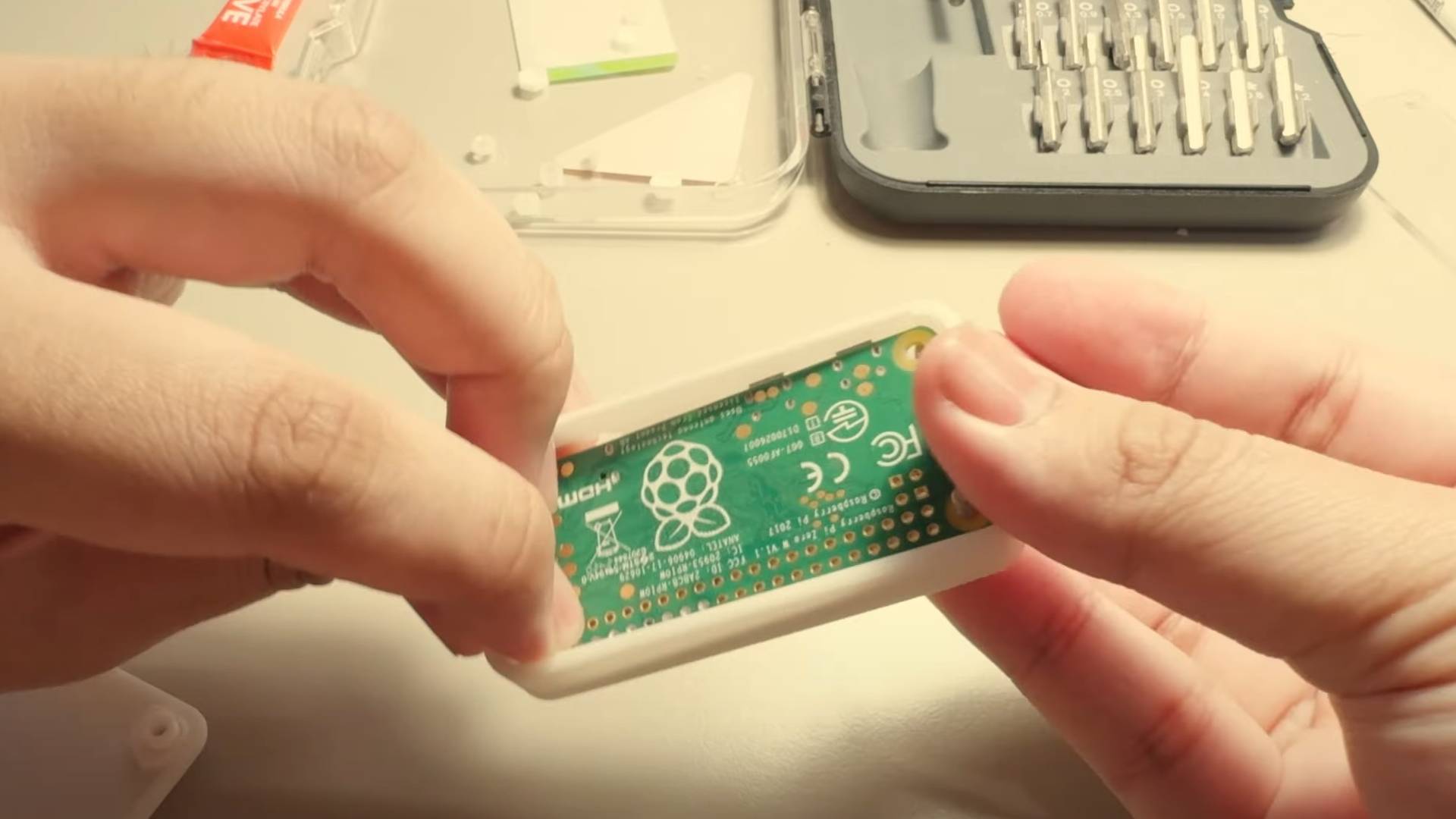

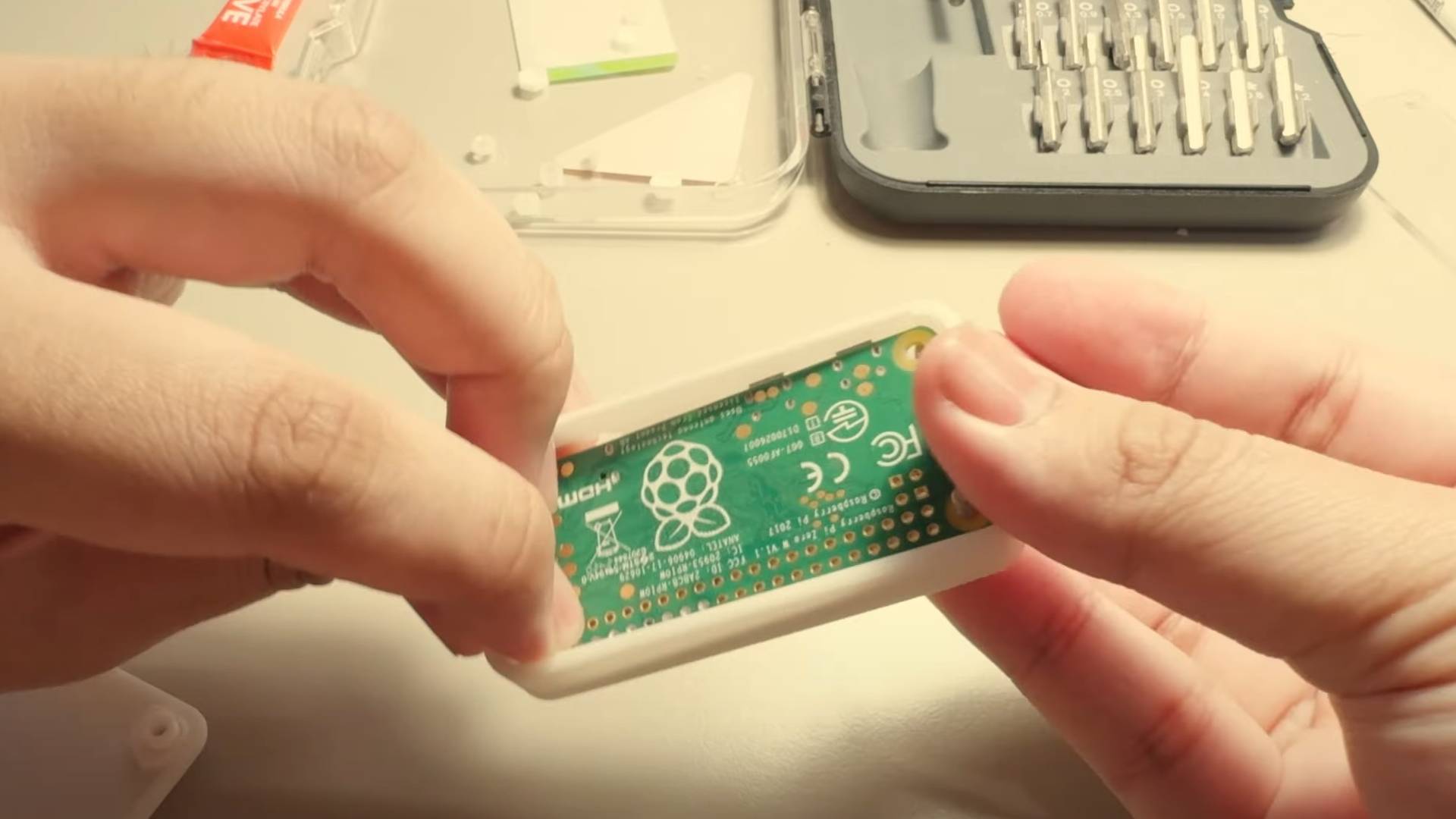

A Raspberry Pi Zero can run a local LLM using llama.cpp. While this works, slow token generation makes it impractical for real-world use.

© YouTube: Build with Binh